We all take things for granted. Okay, some more than others, but we all do. When someone asks you a question about something that is so obvious to you, you’re left speechless. You simply cannot find the proper explanation for something that is, just because it is.

This happened to me this weekend. I was explaining depth of field to someone and how it is affected by different apertures. So far, so good. Until the person asked: “But why does a different aperture give a different depth of field?” It’s always these “why” questions that get you into trouble. At the time I got away with it by saying not to bother with the why and simply accept that it does. But afterwards I gave it more thought. I was sure I had learned the principle behind it once, but I could no longer recall it exactly. Why does a different aperture give a different depth of field? Fortunately we’re blessed with the Internet and Google these days, so it didn’t take me long to find out why it is as it is. Although this is normally outside the scope of this site, I’d like to share my regained wisdom with you for a change.

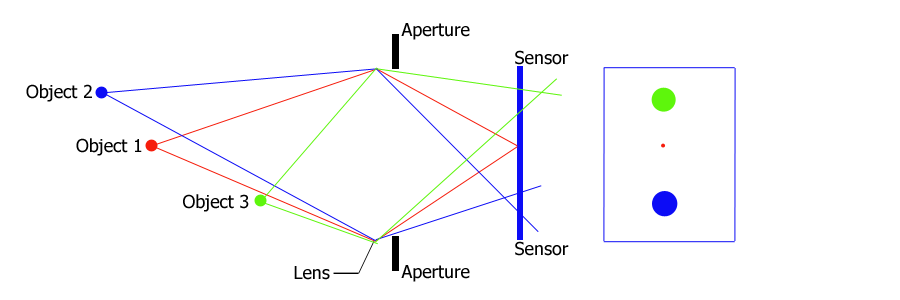

Let’s say I want to take one picture of three objects standing more or less in line with each other. I focus on the middle one (Object 1) to make sure it is sharp in the resulting image. Let’s say we use a fully open aperture, f/2 or something similar, which, as we all know, gives us minimal depth of field. But why? Let’s look at the drawing to understand.

Because we focused on Object 1, its internal focal point (the point behind the lens where its light converges again) falls exactly on the sensor (or on the film, for analog cameras). Object 2 is farther from the camera than Object 1, and Object 3 is closer. Therefore their internal focal points fall in front of and behind the sensor respectively, as the drawing illustrates. This causes the so-called circles of confusion: the light from Object 2 has already converged and is spreading out again when it reaches the sensor, while the light from Object 3 has not converged yet, so each point of these objects is recorded as a small disc instead of a sharp point. As a result we see these objects blurred in the final image. Only Object 1 will be spot on.
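To put some numbers on the drawing, here is a minimal sketch that assumes an ideal thin lens. The 50 mm focal length and the distances (Object 1 at 2 m, Object 2 at 3 m, Object 3 at 1.5 m) are made-up values for illustration, not taken from the drawing, and the function names are hypothetical. The thin-lens equation tells us where each object's light converges; similar triangles then give the width of the light cone where it crosses the sensor, which is the circle of confusion.

```python
import math


def image_distance(focal_mm: float, object_mm: float) -> float:
    """Solve the thin-lens equation 1/f = 1/u + 1/v for the image distance v."""
    return focal_mm * object_mm / (object_mm - focal_mm)


def blur_diameter(focal_mm: float, f_number: float,
                  sensor_mm: float, image_mm: float) -> float:
    """Diameter of the circle of confusion one object point leaves on the sensor.

    The light from the point forms a cone whose base is the aperture
    (diameter focal_mm / f_number) and whose tip lies image_mm behind the lens.
    By similar triangles, the cone is aperture * |sensor - image| / image wide
    where it meets the sensor, whether the sensor is before or after the tip.
    """
    aperture_mm = focal_mm / f_number
    return aperture_mm * abs(sensor_mm - image_mm) / image_mm


FOCAL_MM = 50.0  # assumed lens
OBJECTS_MM = {"Object 1": 2000.0, "Object 2": 3000.0, "Object 3": 1500.0}  # assumed distances

# Focusing on Object 1 means placing the sensor where Object 1's light converges.
sensor_mm = image_distance(FOCAL_MM, OBJECTS_MM["Object 1"])

for name, distance_mm in OBJECTS_MM.items():
    v = image_distance(FOCAL_MM, distance_mm)
    if math.isclose(v, sensor_mm):
        where = "on"
    elif v < sensor_mm:
        where = "in front of"
    else:
        where = "behind"
    blur = blur_diameter(FOCAL_MM, 2.0, sensor_mm, v)
    print(f"{name}: converges {v:.3f} mm behind the lens ({where} the sensor); "
          f"blur circle at f/2 = {blur:.3f} mm")
```

With these assumed numbers, the farther Object 2 converges in front of the sensor, the closer Object 3 converges behind it, and both leave a blur circle of roughly 0.21 mm at f/2.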

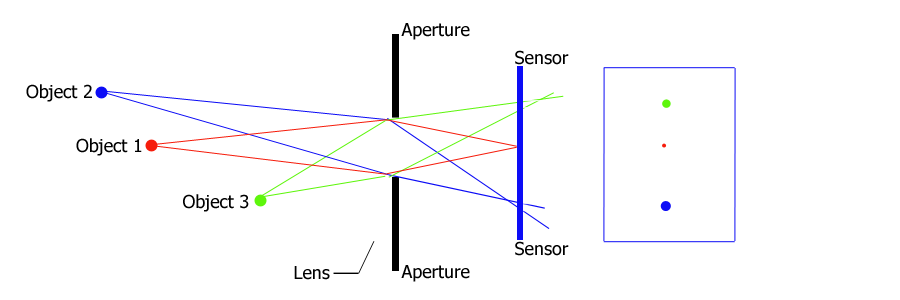

Now let’s see what happens if we use a much smaller aperture (to get greater depth of field). We used f/2 in the previous example, so let’s use f/16 now. Let’s bring in the same drawing, or rather, almost the same drawing.

What we see here is that the internal focal points of Objects 2 and 3 have not moved (where the light converges depends only on the lens and the object’s distance, not on the aperture), but because of the smaller aperture the cones of light heading towards them are much narrower. Where these narrow cones cross the sensor, they produce much smaller circles of confusion. In the final image, Objects 2 and 3 will appear to be in focus. Please note the careful wording here: they appear to be in focus. Strictly speaking they are not. Object 1 is the only one that is truly in focus, but because the blurring of the others is imperceptible, we see them as sharp. And that is how aperture and depth of field are related. Larger apertures cause larger circles of confusion, resulting in blurred objects in the final image. Smaller apertures cause smaller circles of confusion. Mind you, the objects are still out of focus, but the blurring is too small for the human eye to notice.
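Here is a small sketch that compares f/2 and f/16 for the two out-of-focus objects, assuming an ideal thin 50 mm lens focused at 2 m, with Object 2 at 3 m and Object 3 at 1.5 m (all illustrative values). The 0.03 mm limit for "looks sharp" is an assumption too: it is a commonly used convention for full-frame sensors, not a physical constant. The function names are hypothetical.

```python
def image_distance(focal_mm: float, object_mm: float) -> float:
    """Thin-lens equation 1/f = 1/u + 1/v solved for v (the aperture plays no part)."""
    return focal_mm * object_mm / (object_mm - focal_mm)


def blur_diameter(focal_mm: float, f_number: float,
                  sensor_mm: float, image_mm: float) -> float:
    """Width at the sensor of the light cone (base = aperture, tip = image point)."""
    aperture_mm = focal_mm / f_number
    return aperture_mm * abs(sensor_mm - image_mm) / image_mm


FOCAL_MM = 50.0        # assumed lens
SHARP_LIMIT_MM = 0.03  # assumed "looks sharp" threshold (common full-frame convention)
sensor_mm = image_distance(FOCAL_MM, 2000.0)  # focused on Object 1 at an assumed 2 m

results = {}
for f_number in (2.0, 16.0):
    for name, distance_mm in (("Object 2", 3000.0), ("Object 3", 1500.0)):
        v = image_distance(FOCAL_MM, distance_mm)  # identical at every f-number
        blur = blur_diameter(FOCAL_MM, f_number, sensor_mm, v)
        results[(f_number, name)] = blur
        verdict = "appears sharp" if blur <= SHARP_LIMIT_MM else "visibly blurred"
        print(f"f/{f_number:g}, {name}: blur circle {blur:.4f} mm ({verdict})")
```

Note that `image_distance` never sees the f-number: the focal points stay where they are, and only the width of the cone changes. Going from f/2 to f/16 shrinks every blur circle by a factor of 16 / 2 = 8, here from about 0.21 mm to about 0.027 mm, which drops below the assumed 0.03 mm limit.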

In the little research I did for this entry, I noticed that of the thousands of articles on depth of field on the Web, almost all simply state that wider apertures give you less depth of field. That is true, of course, but only a few of them tell you why. Here’s a contribution to the latter category.
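If you want to see how much less depth of field a wider aperture gives, the blur-circle geometry can be turned around: pick the largest blur circle you still accept as sharp and solve for the nearest and farthest distances that stay under it. The sketch below uses the standard thin-lens hyperfocal-distance formulas and assumes a 50 mm lens focused at 2 m with a 0.03 mm acceptable blur circle; these are illustrative values, and `dof_limits` is a hypothetical helper name.

```python
import math


def dof_limits(focal_mm: float, f_number: float, focus_mm: float,
               coc_mm: float = 0.03) -> tuple[float, float]:
    """Near and far limits of acceptable sharpness for an ideal thin lens.

    coc_mm is the largest blur circle still accepted as sharp (assumed 0.03 mm).
    All distances are measured from the lens, in millimetres.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit stays acceptably sharp
    else:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far


for n in (2, 4, 8, 16):
    near, far = dof_limits(50.0, n, 2000.0)
    print(f"f/{n}: acceptably sharp from {near / 1000:.2f} m to {far / 1000:.2f} m "
          f"(depth of field {(far - near) / 1000:.2f} m)")
```

Under these assumptions the sharp zone around the 2 m focus point is only about 19 cm deep at f/2, but stretches from roughly 1.46 m to 3.20 m at f/16.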

Hi,

I was just wondering about the geometric optics between the objects in a photograph and the detector array. Thanks for that.

But now I am wondering how you can reconstruct the object from what the detector array detects. There are so many possible angles at which light can enter the camera, and so many possible positions of objects in 3D, that a specific pixel cannot really know where the object is. Let’s say the aperture is small (the second example)… then if Object 2 is farther from the lens but at the same position in the plane, the rays won’t hit the same pixel… For example, if we take a family photo with trees in the background, will the trees be shifted in the image compared to their real position?

Hope I’m clear. Let me know!

Good job BTW